Submission

A complete submission includes a download link to a Docker tar file and a short paper.

Save the Docker image with the following command (please do not use capital letters in the team name):

docker save teamname:latest -o teamname.tar.gz

The submitted Docker file should be named `teamname.tar.gz`. The testing cases are evaluated one by one by running the Docker container with:

docker image load < teamname.tar.gz

docker container run --gpus "device=1" --name teamname --rm -v $PWD/inputs/:/workspace/inputs/ -v $PWD/teamname_outputs/:/workspace/outputs/ teamname:latest /bin/bash -c "sh predict.sh"
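To dry-run the same invocation locally before submitting, the run command can be assembled programmatically. The Python sketch below is only an illustration: the helper name `build_run_command` and its `workdir` parameter are hypothetical, and the only Docker flags it uses are the ones shown in the command above.

```python
import os
import shlex


def build_run_command(teamname, workdir=None):
    """Return the evaluation `docker container run` call as an argument list.

    `build_run_command` and `workdir` are hypothetical names used for
    illustration; the flags mirror the command given in this section.
    """
    # The guideline asks for a team name without capital letters.
    if teamname != teamname.lower():
        raise ValueError("teamname must not contain capital letters")
    workdir = workdir or os.getcwd()  # plays the role of $PWD
    return [
        "docker", "container", "run",
        "--gpus", "device=1",
        "--name", teamname,
        "--rm",
        "-v", f"{workdir}/inputs/:/workspace/inputs/",
        "-v", f"{workdir}/{teamname}_outputs/:/workspace/outputs/",
        f"{teamname}:latest",
        "/bin/bash", "-c", "sh predict.sh",
    ]


if __name__ == "__main__":
    # Print the command for inspection; pass the list to subprocess.run to execute it.
    print(shlex.join(build_run_command("teamname")))
```

Building the command as a list avoids shell quoting problems when it is later passed to `subprocess.run`.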

We highly recommend that participants check the official evaluation code at https://github.com/JunMa11/FLARE2021.

Only one GPU may be used during the inference phase, and we have already specified the GPU in the docker command. Thus, please do not specify the GPU via CUDA_VISIBLE_DEVICES in the predict.sh file.

NVIDIA provides a DGX-1 for the evaluation.

Validation submission guideline

- Upload your validation segmentation results and short paper directly on grand-challenge.

- Note: We allow at most five submissions on the validation set.

Testing submission guideline

- Email a download link to your Docker tar file and a short paper to FLARE21@aliyun.com.

- Note: We allow only one submission on the testing set for each short paper.

Evaluation Metrics

- Dice Similarity Coefficient

- Normalized Surface Dice

- Running time

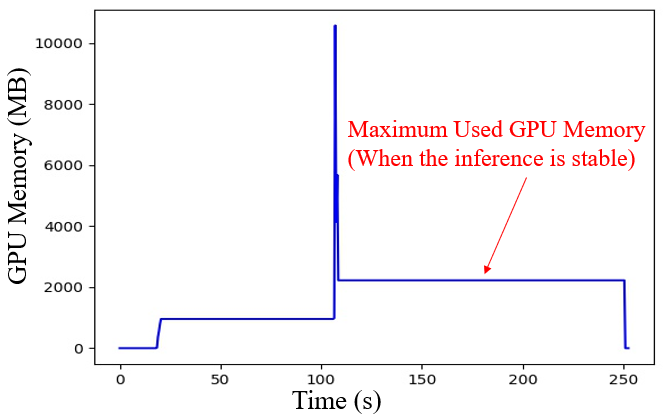

- Maximum used GPU memory (when the inference is stable)

The evaluation code and a video demo have been released.
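For orientation, the Dice Similarity Coefficient of one organ is 2|A ∩ B| / (|A| + |B|), where A and B are the predicted and reference voxel sets for that organ. The Python sketch below illustrates this formula and the averaging over four organs; it is not the released evaluation code. The flat label lists, the label values 1 to 4 for the four organs, and the convention of returning 1.0 when an organ is absent from both masks are assumptions made for the example.

```python
def dice_coefficient(pred, truth, label):
    """DSC of one label between two equally sized flat label sequences."""
    if len(pred) != len(truth):
        raise ValueError("prediction and reference must have the same size")
    pred_count = sum(1 for p in pred if p == label)
    truth_count = sum(1 for t in truth if t == label)
    overlap = sum(1 for p, t in zip(pred, truth) if p == label and t == label)
    if pred_count + truth_count == 0:
        # Assumption: an organ absent from both masks counts as perfect agreement.
        return 1.0
    return 2.0 * overlap / (pred_count + truth_count)


def mean_dice(pred, truth, labels=(1, 2, 3, 4)):
    """Average DSC over the organ labels (label values 1-4 are assumed)."""
    scores = [dice_coefficient(pred, truth, label) for label in labels]
    return sum(scores) / len(scores)


if __name__ == "__main__":
    # Toy one-dimensional "volumes" with background 0 and organs 1-4.
    prediction = [0, 1, 1, 2, 3, 4]
    reference = [0, 1, 2, 2, 3, 4]
    print(mean_dice(prediction, reference))
```

The Normalized Surface Dice additionally depends on surface distances and a tolerance, so it is not reproduced here.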

Ranking Scheme

Our ranking scheme is based on "rank then average" rather than "average then rank". It includes the following three steps:

- Step 1. Compute the four evaluation metrics (average DSC and NSD of four organs, runtime, maximum used GPU memory) for each testing case.

- Step 2. Rank the participants on each metric for each of the 100 testing cases; each participant will have 100*4 rankings.

- Step 3. Average all these rankings and then normalize by the number of teams.
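The three steps above can be sketched in Python as follows. This is a minimal illustration, not the official ranking code. The nested `results` layout, the metric keys, the tie handling (tied teams share the average rank) and the reading of "normalize" as division by the number of teams are all assumptions.

```python
from statistics import mean

# Assumed direction per metric: True means a larger value is better.
HIGHER_IS_BETTER = {"dsc": True, "nsd": True, "runtime": False, "gpu_memory": False}


def rank_values(values, higher_is_better):
    """Return 1-based ranks (1 = best); ties share the average rank (assumption)."""
    order = sorted(range(len(values)), key=lambda i: values[i], reverse=higher_is_better)
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        # Extend the group while the next value ties with the current one.
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        shared = (start + end) / 2 + 1  # mean of positions start+1 .. end+1
        for pos in range(start, end + 1):
            ranks[order[pos]] = shared
        start = end + 1
    return ranks


def rank_then_average(results):
    """results[team][case][metric] -> value; returns {team: normalized mean rank}."""
    teams = sorted(results)
    cases = sorted(next(iter(results.values())))
    collected = {team: [] for team in teams}
    for case in cases:  # Step 2: rank per case and per metric
        for metric, higher in HIGHER_IS_BETTER.items():
            values = [results[team][case][metric] for team in teams]
            for team, rank in zip(teams, rank_values(values, higher)):
                collected[team].append(rank)
    # Step 3: average all rankings, then divide by the number of teams.
    return {team: mean(collected[team]) / len(teams) for team in teams}


if __name__ == "__main__":
    # Made-up numbers for two hypothetical teams and two cases (Step 1 output).
    example = {
        "team_a": {
            "case_1": {"dsc": 0.90, "nsd": 0.80, "runtime": 10.0, "gpu_memory": 2000},
            "case_2": {"dsc": 0.92, "nsd": 0.85, "runtime": 12.0, "gpu_memory": 2100},
        },
        "team_b": {
            "case_1": {"dsc": 0.85, "nsd": 0.75, "runtime": 20.0, "gpu_memory": 3000},
            "case_2": {"dsc": 0.88, "nsd": 0.80, "runtime": 25.0, "gpu_memory": 3200},
        },
    }
    print(rank_then_average(example))  # lower score = better overall rank
```

Ranking before averaging keeps a single extreme case (for example, one very slow inference) from dominating a team's final score, since each case contributes only a rank.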